How did things begin?

Back when we were students doing research, we realized that GPU-accelerated compute was hard to secure. Even when we could find it, we could not afford it. However, not all workloads need the newest, largest and most expensive GPUs. With thousands of smaller GPUs sitting idle in datacenters and private clusters, we envisioned a system that composes this existing hardware, allowing anyone to run GPU workloads as if they had access to hardware otherwise out of reach. No longer merely a vision, this system is what we are building today.

What are you building next?

We have just released our first engine for inference across distributed, heterogeneous GPUs. We are now working on v1, which will feature more parallelism, higher throughput and less waiting.

How can I get started?

Check out our Quickstart page.

I want to follow the developments!

- Our code is open source! Check out our repositories on GitHub

- Come join the discussion on Discord

- We sometimes post on Twitter

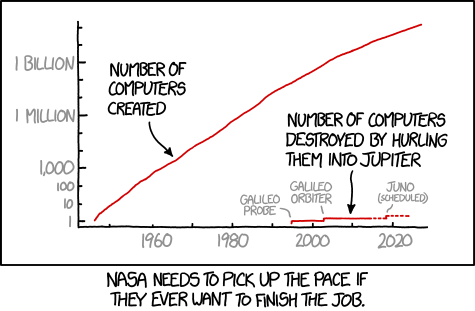

Enjoy an XKCD on us